In data analysis and artificial intelligence, natural language processing (NLP) is the field concerned with enabling machines to understand and interact with human language. NLP uses a range of tools and techniques to analyze, understand, and generate natural language.

One approach to NLP uses machine learning. Machine learning models are trained to recognize patterns in natural language, allowing machines to identify important concepts and structure in written or spoken language. Instead of following hand-written rules, such models learn how to interpret language from large numbers of examples.

Another approach to NLP draws on linguistics. Linguistic approaches study the grammatical structure and syntax of language in order to better understand its meaning. With this approach, machines apply explicitly encoded grammatical rules to analyze words, phrases, and sentences and determine their meaning.

Overall, NLP is an important tool that enables machines to understand and interpret natural language. By combining a range of techniques and approaches, machines can be taught to process language in increasingly human-like ways. This has the potential to revolutionize the way we interact with machines and can greatly enhance their ability to communicate with us in a meaningful way.

What algorithms are used in NLP?

There are several algorithms used in NLP to process and analyze natural language data. Here are a few examples:

1. Naive Bayes: This algorithm is used for text classification tasks such as sentiment analysis, spam filtering, and topic classification. It calculates the probability that a text belongs to a given category from the words it contains, under the simplifying (“naive”) assumption that words occur independently of one another given the category.

2. Hidden Markov Models (HMMs): HMMs are widely used for speech recognition, language modeling, and part-of-speech tagging. An HMM models a sequence of observations as being generated by a chain of hidden states; in part-of-speech tagging, for example, the observations are the words and the hidden states are their tags.

3. Conditional Random Fields (CRFs): CRFs are used for sequence labeling tasks such as named entity recognition and chunking. They model the conditional probability of the entire label sequence given the input words, so the label chosen for each word takes into account the surrounding words and the neighboring labels.

4. Neural Networks: Deep learning techniques such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) are increasingly popular for NLP tasks. CNNs are often used for tasks such as document classification, while RNNs are used for tasks such as language modeling and machine translation.
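To make the Naive Bayes idea concrete, here is a minimal multinomial Naive Bayes sketch in plain Python. It assumes whitespace tokenization and add-one (Laplace) smoothing; the function names and the four-sentence training set are hypothetical, and production code would normally use a library implementation such as scikit-learn’s `MultinomialNB`.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(docs, labels):
    """Count documents per label and word frequencies per label."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)  # label -> Counter of word frequencies
    vocab = set()
    for doc, label in zip(docs, labels):
        tokens = doc.lower().split()
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return class_counts, word_counts, vocab

def predict(model, doc):
    """Return the label with the highest log-probability for `doc`."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, float("-inf")
    for label, n_docs in class_counts.items():
        # log P(label) + sum of log P(word | label): the "naive" independence assumption
        score = math.log(n_docs / total_docs)
        total_words = sum(word_counts[label].values())
        for token in doc.lower().split():
            if token not in vocab:
                continue  # skip words never seen during training
            # add-one (Laplace) smoothing so unseen (word, label) pairs get nonzero probability
            count = word_counts[label][token]
            score += math.log((count + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical toy training data
docs = ["win money now", "cheap money offer", "meeting at noon", "lunch meeting today"]
labels = ["spam", "spam", "ham", "ham"]
model = train_naive_bayes(docs, labels)
print(predict(model, "money offer today"))  # spam
```

Scores are summed in log space rather than multiplied as raw probabilities, which avoids numerical underflow when a text contains many words.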

Overall, the choice of algorithm depends on the specific NLP task at hand, as well as the nature of the data being analyzed. Different algorithms have different strengths and weaknesses, and often a combination of algorithms is used to achieve the best results.

What is happening in NLP today?

As the field of NLP continues to evolve, new techniques and approaches are emerging to help machines better understand natural language. One of the most successful is deep learning, which trains artificial neural networks with many layers to recognize patterns in data and has worked particularly well with text. Deep neural networks can learn to identify and extract important information from text, such as its sentiment or topic.

Another important development in NLP is the transformer, a neural network architecture built around a mechanism called self-attention. Transformer models capture the context in which words are used, enabling machines to disambiguate between different meanings of a word (such as “bank” in “river bank” versus “bank account”). Such models have been used successfully in many NLP applications, including language translation and text summarization.
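Transformers model context through self-attention: each word’s vector is replaced by a weighted average of all the vectors in the sentence, with weights derived from scaled dot products. The sketch below is a simplified, single-head version that assumes the queries, keys, and values are the input vectors themselves; real transformers first multiply the inputs by learned projection matrices and stack many such layers. The token vectors are made up for illustration.

```python
import math

def softmax(xs):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Single-head scaled dot-product self-attention where queries, keys and
    values are all the input vectors (no learned projections)."""
    d = len(vectors[0])
    outputs = []
    for q in vectors:
        # similarity of this token to every token, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        # new representation: attention-weighted average of all token vectors
        outputs.append([sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)])
    return outputs

# Made-up 2-dimensional token vectors
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens):
    print([round(x, 3) for x in row])
```

Each output row mixes information from every token, weighted toward the tokens most similar to it; this is the sense in which a word’s representation depends on its context.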

In addition, pretrained models such as Google’s BERT (Bidirectional Encoder Representations from Transformers) have emerged as powerful tools in NLP.

BERT is a pretrained transformer network that represents each word in light of the words on both sides of it in a sentence. When it was released in 2018, it outperformed previous NLP techniques on several benchmarks.

Ultimately, the success of NLP techniques depends heavily on the availability of high-quality training data. As more data becomes available and techniques continue to improve, NLP is increasingly changing the way we interact with machines and understand language. With the ability to process and interpret vast amounts of natural language data, machines are poised to transform industries ranging from healthcare to finance.

Preprocessing and Text Cleaning in NLP

In natural language processing, effective preprocessing and text cleaning are fundamental steps in preparing textual data for analysis and modeling. They ensure that the data is in a usable, standardized format. This section covers the key preprocessing steps involved in text cleaning.

Text Tokenization

Text tokenization is the process of breaking down a text into smaller units, typically words or phrases, known as tokens.

- Word Tokenization: The text is divided into individual words (and, often, punctuation marks), each treated as a token. This is one of the most common preprocessing steps in NLP.

- Sentence Tokenization: Text can be split into sentences, which is valuable for tasks like text summarization or machine translation.
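Both kinds of tokenization can be sketched with Python’s standard `re` module. The rules here are simplifying assumptions: a sentence is taken to end at `.`, `!`, or `?` followed by whitespace, which mishandles abbreviations such as “Dr.”, so real projects usually rely on a dedicated tokenizer library.

```python
import re

def sentence_tokenize(text):
    """Split after '.', '!' or '?' when followed by whitespace (naive rule)."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def word_tokenize(text):
    """Return runs of word characters/apostrophes, or single punctuation marks."""
    return re.findall(r"[\w']+|[^\w\s]", text)

text = "NLP is fun. Tokenizers split text! Don't they?"
print(sentence_tokenize(text))  # ['NLP is fun.', 'Tokenizers split text!', "Don't they?"]
print(word_tokenize("Don't they?"))  # ["Don't", 'they', '?']
```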

Stopword Removal

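Stopwords are common words like “the,” “and,” and “is” that carry little meaning on their own. Removing them reduces the number of distinct features (the data’s dimensionality) and lets models focus on more informative words. NLP practitioners typically use a predefined list of stopwords and remove its entries from the text during preprocessing. Such lists should be chosen with the task in mind: a negation like “not,” for example, matters for sentiment analysis.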
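Stopword removal amounts to filtering tokens against a set. The eight-word list below is a hypothetical stand-in for the longer curated lists that libraries provide; the comparison is case-insensitive so that “The” and “the” are both removed.

```python
# Hypothetical, deliberately tiny stopword list for illustration
STOPWORDS = {"the", "and", "is", "a", "an", "of", "to", "in"}

def remove_stopwords(tokens):
    """Keep only tokens whose lowercase form is not a stopword."""
    return [t for t in tokens if t.lower() not in STOPWORDS]

print(remove_stopwords(["The", "cat", "is", "on", "the", "mat"]))  # ['cat', 'on', 'mat']
```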

Stemming and Lemmatization

Stemming and lemmatization reduce words to a base or root form. Stemming strips affixes, most often suffixes, using simple rules; it is fast but can produce non-words (the Porter stemmer, for example, reduces “studies” to “studi”). Lemmatization instead uses a vocabulary and the word’s context, such as its part of speech, to return a valid dictionary form, or lemma (“studies” becomes “study”).
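The contrast between the two can be sketched with a toy suffix-rewriting stemmer and a lookup table. Both are illustrative assumptions, not real algorithms: the rule list is far cruder than the Porter stemmer, and the `LEMMAS` dictionary stands in for a lexicon such as WordNet. Unlike a real lemmatizer, this lookup ignores part of speech.

```python
# Hypothetical (suffix, replacement) rules, tried in order; far simpler than Porter.
RULES = [("ies", "i"), ("ing", ""), ("ed", ""), ("s", "")]

def simple_stem(word):
    """Apply the first matching rule, keeping a stem of at least three letters."""
    for suffix, replacement in RULES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)] + replacement
    return word

# Hypothetical lemma table standing in for a real dictionary.
LEMMAS = {"studies": "study", "studying": "study", "ran": "run", "mice": "mouse"}

def simple_lemmatize(word):
    """Return the dictionary form if known, otherwise the word unchanged."""
    return LEMMAS.get(word, word)

for word in ["studies", "studying", "ran", "mice"]:
    print(word, simple_stem(word), simple_lemmatize(word))
```

The stemmer turns “studies” into the non-word “studi” and cannot relate irregular forms such as “ran” or “mice” to their roots, while the dictionary lookup returns valid base forms.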

Noise Removal

Noise in text data can include special characters, HTML tags, or inconsistent formatting. Punctuation, symbols, and non-alphanumeric characters can be removed to ensure clean text. In web data, HTML tags are often present and need to be stripped from the text.
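A minimal cleaning sketch using only the standard library: `html.parser.HTMLParser` collects the text between tags, then regular expressions replace punctuation and symbols with spaces and collapse repeated whitespace. Lowercasing is included as an assumption about what consistent formatting means for a given project. This simple extractor also keeps the contents of `<script>` and `<style>` elements, which a real pipeline would discard.

```python
import re
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collect the text between tags and ignore the tags themselves."""
    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        self.parts.append(data)

def clean_text(raw):
    parser = TextExtractor()
    parser.feed(raw)
    parser.close()  # flush any buffered text
    text = " ".join(parser.parts)
    text = re.sub(r"[^\w\s]", " ", text)  # replace punctuation and symbols with spaces
    text = re.sub(r"\s+", " ", text)      # collapse runs of whitespace
    return text.strip().lower()

print(clean_text("<p>Hello, <b>World</b>!!</p>"))  # hello world
```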

Handling Missing Data

In real-world scenarios, text data may contain missing or incomplete entries. These gaps are typically handled by imputation: filling missing values with a placeholder token or with contextually relevant information.
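A minimal imputation sketch over records stored as dictionaries. The field names, the `<missing>` placeholder token, and the choice of a review’s title as the contextually relevant fallback are all hypothetical; the function falls back to the placeholder when no related content is available.

```python
PLACEHOLDER = "<missing>"  # hypothetical placeholder token

def fill_missing(records, field, fallback_field=None, placeholder=PLACEHOLDER):
    """Return copies of `records` where an empty `field` is filled from
    `fallback_field` if that has content, otherwise with `placeholder`."""
    filled = []
    for record in records:
        value = record.get(field)
        if value is None or not str(value).strip():
            alt = record.get(fallback_field) if fallback_field else None
            value = alt if alt is not None and str(alt).strip() else placeholder
        filled.append({**record, field: value})  # copy; the input is not modified
    return filled

# Hypothetical review records
reviews = [
    {"id": 1, "title": "Love it", "text": "Great product"},
    {"id": 2, "title": "Broke quickly", "text": None},
    {"id": 3, "title": "", "text": "   "},
]
for r in fill_missing(reviews, "text", fallback_field="title"):
    print(r["id"], r["text"])
```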

Text Representation in NLP

Text representation is a crucial aspect of natural language processing (NLP) that involves converting textual data into a format suitable for analysis and machine learning. Various techniques and approaches are employed to represent text effectively.

Term Frequency-Inverse Document Frequency (TF-IDF)

TF-IDF is a text representation technique that weighs the importance of a word in a document against how often it occurs across the whole corpus. It combines term frequency (TF), which measures how often a word appears in a document, with inverse document frequency (IDF), which measures how rare the word is across a collection of documents. Words that are frequent in one document but rare elsewhere therefore receive high scores, while words that appear everywhere receive low scores. Documents are represented as vectors in which each dimension corresponds to a term and the value is that term’s TF-IDF score.
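In its basic form, the TF-IDF score of term t in document d is tf(t, d) × log(N / df(t)), where tf(t, d) is the count of t in d, N is the number of documents, and df(t) is the number of documents that contain t. The sketch below implements that unsmoothed form with whitespace tokenization; libraries such as scikit-learn’s `TfidfVectorizer` use smoothed and normalized variants by default, so their numbers will differ.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {term: score} dict per document, using raw counts for tf
    and log(N / df) for idf (no smoothing)."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))  # count each term at most once per document
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({t: count * math.log(n_docs / df[t]) for t, count in tf.items()})
    return vectors

docs = ["the cat sat", "the dog sat", "the cat ran"]
for vec in tf_idf(docs):
    print({t: round(s, 3) for t, s in vec.items()})
```

A term that appears in every document, like “the” here, gets an IDF of log(1) = 0 and therefore a score of zero.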

Bag of Words (BoW)

The Bag of Words model is a foundational technique in NLP for text representation. BoW represents text as an unordered collection of words or tokens, ignoring grammar and word order. Each document is transformed into a vector, where each dimension represents a unique word, and the value is the word’s frequency in the document.
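A minimal Bag of Words sketch, assuming lowercase whitespace tokenization and a vocabulary sorted alphabetically so that every document vector uses the same dimension order.

```python
from collections import Counter

def bag_of_words(docs):
    """Return a sorted vocabulary and one count vector per document."""
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({t for tokens in tokenized for t in tokens})
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append([counts[term] for term in vocab])
    return vocab, vectors

vocab, vectors = bag_of_words(["the cat sat on the mat", "the dog sat"])
print(vocab)    # ['cat', 'dog', 'mat', 'on', 'sat', 'the']
print(vectors)  # [[1, 0, 1, 1, 1, 2], [0, 1, 0, 0, 1, 1]]

# Word order is discarded, so these two sentences get identical vectors
_, (v1, v2) = bag_of_words(["dog bites man", "man bites dog"])
print(v1 == v2)  # True
```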

Word Embeddings (Word2Vec, GloVe)

Word embeddings represent words as dense vectors in a continuous vector space. Word2Vec and GloVe are two widely used methods that learn these vectors from large text corpora so that they capture semantic relationships: words with similar meanings are located close to each other in the vector space. This makes it possible to measure how similar two words are, for example with cosine similarity.
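The sketch below shows how similarity is measured once embeddings exist. The three-dimensional vectors are invented for illustration, not taken from a trained Word2Vec or GloVe model (real embeddings are learned from large corpora and usually have a few hundred dimensions); cosine similarity is one common choice of measure.

```python
import math

# Invented toy vectors for illustration only, not output of a trained model
embeddings = {
    "king":  [0.80, 0.65, 0.10],
    "queen": [0.78, 0.70, 0.15],
    "apple": [0.10, 0.05, 0.90],
}

def cosine_similarity(u, v):
    """Cosine of the angle between u and v: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

print(round(cosine_similarity(embeddings["king"], embeddings["queen"]), 3))
print(round(cosine_similarity(embeddings["king"], embeddings["apple"]), 3))
```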

Document-Term Matrix (DTM)

The Document-Term Matrix (DTM) is another approach to text representation. A DTM represents documents as rows and terms as columns, and each cell holds the frequency of that term in that document. Because every document becomes a vector over the same set of terms, documents can be compared for similarity and grouped by clustering.
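A minimal sketch of building a DTM from raw counts and comparing its rows with cosine similarity. Whitespace tokenization and the three example documents are assumptions for illustration; `terms` gives the column order.

```python
import math
from collections import Counter

def document_term_matrix(docs):
    """Rows are documents, columns are terms (sorted), cells are raw counts."""
    tokenized = [doc.lower().split() for doc in docs]
    terms = sorted({t for tokens in tokenized for t in tokens})
    matrix = []
    for tokens in tokenized:
        counts = Counter(tokens)
        matrix.append([counts[t] for t in terms])
    return terms, matrix

def cosine(u, v):
    """Cosine similarity between two rows; 0.0 if either row is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

docs = ["stock prices rose", "stock prices fell", "the team won the match"]
terms, dtm = document_term_matrix(docs)
print(terms)
for row in dtm:
    print(row)
print(round(cosine(dtm[0], dtm[1]), 3), round(cosine(dtm[0], dtm[2]), 3))
```

The two finance headlines share two of their three terms and score well above zero, while the sports headline shares none with them and scores exactly zero, which is the signal similarity search and clustering build on.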

Character-level Embeddings

Character-level embeddings represent text at the level of individual characters. Instead of words, this approach considers characters, allowing for the representation of out-of-vocabulary words and languages with complex morphology. Characters are encoded as vectors, and words or documents are represented as sequences of character vectors.
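A minimal sketch of the input side of a character-level model: each character is mapped to an index and then to a one-hot vector, with index 0 reserved (an assumption of this sketch) for characters never seen when the vocabulary was built. In a neural model, these indices would typically select rows of a learned embedding matrix, yielding dense character vectors instead of one-hot ones.

```python
def build_char_vocab(words):
    """Map each character seen in `words` to an index; 0 is reserved for unknowns."""
    chars = sorted({c for w in words for c in w})
    return {c: i + 1 for i, c in enumerate(chars)}

def encode_word(word, char_to_index):
    """Represent a word as a sequence of one-hot character vectors."""
    size = len(char_to_index) + 1  # +1 for the unknown-character slot
    vectors = []
    for c in word:
        one_hot = [0] * size
        one_hot[char_to_index.get(c, 0)] = 1
        vectors.append(one_hot)
    return vectors

vocab = build_char_vocab(["cat", "cats", "act"])
print(vocab)  # {'a': 1, 'c': 2, 's': 3, 't': 4}
for vec in encode_word("tacs", vocab):  # "tacs" never appeared as a word
    print(vec)
```

Because “tacs” is spelled with known characters, it can be encoded even though it never appeared as a word, which is how character-level models cope with out-of-vocabulary words.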

NLP Models and Algorithms

Models and algorithms are the heart of natural language processing and the foundation of many NLP applications. This section explores the key models and algorithms used in NLP, from rule-based systems to pretrained transformers.

Rule-Based Approaches

Rule-based NLP approaches rely on explicitly defined linguistic rules and patterns.

- Syntax and Grammar Rules: These rules define the structure of sentences and the relationships between words, enabling tasks like part-of-speech tagging and parsing.

- Named Entity Recognition (NER): Rule-based systems can identify named entities such as names, dates, and locations using predefined patterns.
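A minimal rule-based entity extractor, assuming two hypothetical rules: a regular expression for ISO-style dates (YYYY-MM-DD) and a small gazetteer, that is, a fixed list, of place names. Real rule-based systems combine many such patterns, but the principle is the same.

```python
import re

# Hypothetical rules: an ISO-style date pattern and a tiny gazetteer of places
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
LOCATIONS = {"Paris", "Berlin", "Tokyo"}

def rule_based_ner(text):
    """Return (entity, label) pairs found by the date rule and the gazetteer."""
    entities = [(m.group(), "DATE") for m in DATE_PATTERN.finditer(text)]
    for token in re.findall(r"[A-Z][a-z]+", text):  # capitalized words only
        if token in LOCATIONS:
            entities.append((token, "LOCATION"))
    return entities

print(rule_based_ner("The summit opens in Paris on 2024-06-01."))
# [('2024-06-01', 'DATE'), ('Paris', 'LOCATION')]
```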

Machine Learning Models

Machine learning models have played a pivotal role in NLP, enabling various tasks through data-driven approaches.

- Naive Bayes: Naive Bayes classifiers are used for tasks like text classification and sentiment analysis.

- Support Vector Machines (SVM): SVMs are employed for tasks such as text classification, named entity recognition, and information retrieval.

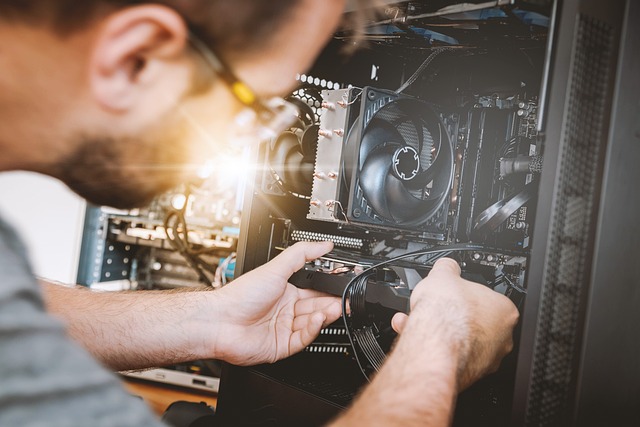

Neural Networks

Neural networks have revolutionized NLP, allowing for more complex and nuanced language understanding.

- Recurrent Neural Networks (RNN): RNNs are used for sequence modeling and tasks like machine translation, speech recognition, and sentiment analysis.

- Convolutional Neural Networks (CNN): CNNs are applied to tasks like text classification, document summarization, and image captioning.

Transformer Models

Transformer models have reshaped NLP and set new standards for understanding and generating natural language.

- BERT (Bidirectional Encoder Representations from Transformers): BERT achieved state-of-the-art results on tasks like question answering and text classification when it was introduced.

- GPT (Generative Pre-trained Transformer): GPT models have excelled in text generation tasks, including content creation and language translation.

- T5 (Text-to-Text Transfer Transformer): T5 treats every task as converting input text into output text, so the same model can be fine-tuned for a wide range of NLP tasks, including summarization and text generation.

Pretrained Language Models

Pretrained language models have gained popularity for their ability to transfer knowledge from large corpora of text to specific NLP tasks. Researchers and practitioners fine-tune pretrained models like GPT-3 and BERT for specific NLP tasks, saving time and resources. Multilingual pretrained models cater to a global audience, supporting multiple languages and improving accessibility.

Natural language processing (NLP) is a fascinating field that combines linguistics, machine learning, and artificial intelligence to enable machines to understand and interpret human language. Through approaches such as machine learning, linguistics, deep learning, and transformer models, NLP has the potential to transform the way we interact with machines and comprehend language.

The preprocessing steps involved in text cleaning, such as text tokenization, stopword removal, stemming and lemmatization, noise removal, and handling missing data, are crucial for preparing textual data for analysis and modeling. These steps ensure that the data is clean and in a standardized format.

Text representation is another vital aspect of NLP, where techniques like Bag of Words, TF-IDF, word embeddings (such as Word2Vec and GloVe), document-term matrix, and character-level embeddings are employed to transform textual data into a format suitable for analysis and machine learning.

NLP models and algorithms are at the core of NLP, enabling various tasks and applications. Rule-based approaches, machine learning models, neural networks, transformer models, and pretrained language models all contribute to the advancement of NLP and its ability to understand and generate natural language.

As the field of NLP continues to evolve, new techniques and approaches will emerge, driven by advancements in technology and the availability of vast amounts of training data. With the potential to revolutionize industries and enhance communication between machines and humans, NLP is an exciting field with boundless possibilities.