Transformers are a type of machine learning model used in artificial intelligence, especially in natural language processing (NLP). The technical details of the transformer architecture are complicated.

Yet these details are what make transformers so important to machine learning. Transformers are neural networks built around a technique called attention. Attention lets the model weigh how relevant each part of the input is to every other part, so it focuses on the most relevant parts and gives less weight to the rest. Earlier models such as recurrent networks also used attention, but transformers rely on it entirely and drop recurrence altogether. This is what sets them apart, and it makes them efficient to train on large and complex datasets.
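
A minimal sketch of scaled dot-product attention, the form used in the original transformer, may make the "focusing" idea concrete. It assumes the query, key and value vectors are already given. In a real model they are learned linear projections of the token embeddings. The names `softmax` and `attention` are illustrative and do not come from any library. Note that no input is fully discarded: low-relevance positions simply receive small weights.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    Each key is scored against the query (dot product divided by sqrt(d)),
    the scores become weights via softmax, and the output is the
    weighted sum of the value vectors.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights

# Toy example: three input positions with 2-dimensional keys and values.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]
out, weights = attention(query, keys, values)
```

Here the query matches the first and third keys equally, so those two positions receive equal weight, while the second position receives the smallest weight but is still included in the output.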

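One key aspect of transformers is their ability to process input data in parallel. Traditional recurrent neural networks (RNNs) process a sequence one step at a time, because each step needs the hidden state produced by the step before it. Transformers have no such dependency: self-attention computes the output for every position of the sequence at once, typically as a few large matrix multiplications that GPUs handle efficiently. This greatly reduces training time, since every position of every training sequence can be processed together. Multi-head attention adds further parallelism by running several attention computations ("heads") side by side over the same input.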
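
The difference can be sketched with two hypothetical toy functions, scalar-valued for brevity. In `rnn_states`, each hidden state needs the previous one, so the loop cannot be reordered. In `self_attention_at`, the output for a position reads only the inputs, never another position's output, so positions can be computed in any order or all at once.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def rnn_states(inputs, w_x=0.5, w_h=0.9):
    """Toy scalar RNN: each state depends on the previous one, so steps run in order."""
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h)  # needs h from the previous step
        states.append(h)
    return states

def self_attention_at(position, xs):
    """Output for one position of a toy scalar self-attention.

    It depends only on the inputs xs, not on any other position's output,
    so every position can be computed independently.
    """
    scores = [xs[position] * x for x in xs]
    weights = softmax(scores)
    return sum(w * x for w, x in zip(weights, xs))

xs = [0.1, 0.4, -0.3, 0.8]
# Computing positions front-to-back or back-to-front gives identical results.
forward = [self_attention_at(i, xs) for i in range(len(xs))]
backward = [self_attention_at(i, xs) for i in reversed(range(len(xs)))][::-1]
```

Because the order does not matter, real implementations compute all positions together as batched matrix operations rather than in a loop.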

Another important aspect of transformers is their ability to handle variable-length input sequences. This is particularly useful for NLP tasks, since sentences and documents vary in length. The flexibility comes from the self-attention mechanism, which has no fixed input size: each word attends to all other words in the sequence, however many there are, and this is also how the model captures the context of each word. When sequences of different lengths are batched together, the shorter ones are padded and a mask prevents the model from attending to the padding. The maximum length is still limited by the model's context window.
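
A sketch of padding and masking, assuming integer token ids with 0 reserved as a hypothetical padding id. `pad_batch` brings every sequence up to the length of the longest one, and `masked_softmax` gives padded positions exactly zero attention weight. Both names are illustrative.

```python
import math

PAD = 0  # hypothetical padding token id

def pad_batch(sequences, pad=PAD):
    """Pad token-id lists to a common length and build a mask (True = real token)."""
    max_len = max(len(s) for s in sequences)
    padded = [s + [pad] * (max_len - len(s)) for s in sequences]
    mask = [[True] * len(s) + [False] * (max_len - len(s)) for s in sequences]
    return padded, mask

def masked_softmax(scores, mask):
    """Softmax over real tokens only; masked (padding) positions get weight 0."""
    top = max(s for s, m in zip(scores, mask) if m)  # stability shift over kept scores
    exps = [math.exp(s - top) if m else 0.0 for s, m in zip(scores, mask)]
    total = sum(exps)
    return [e / total for e in exps]

batch = [[5, 8, 2], [7, 3], [4, 9, 6, 1]]
padded, mask = pad_batch(batch)
# padded is [[5, 8, 2, 0], [7, 3, 0, 0], [4, 9, 6, 1]]

# Attention scores for the second (shortest) sequence; the last two are padding.
weights = masked_softmax([0.2, 1.5, 0.7, 0.3], mask[1])
```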

Lastly, transformers are capable of generating new data. This is done through a process called decoding: the model receives a seed sequence (a prompt), predicts the next token, appends that token to its input, and repeats until it produces an end token or reaches a length limit. This is particularly useful for tasks such as language translation, where the model is trained on pairs of sentences in a source and a target language and, given a new source sentence, generates its translation in the target language.
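
The decoding loop can be sketched as greedy decoding, one of several strategies (beam search and sampling are common alternatives). The `toy_model` below is a hypothetical lookup table that only looks at the last token. It stands in for a trained transformer, which would condition on the whole sequence and, in translation, on the source sentence as well.

```python
from typing import Callable, Dict, List

END = "<end>"

def greedy_decode(next_token_probs: Callable[[List[str]], Dict[str, float]],
                  seed: List[str], max_new_tokens: int = 10) -> List[str]:
    """Generate tokens one at a time, feeding each choice back in as input."""
    tokens = list(seed)
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)   # the model sees everything generated so far
        best = max(probs, key=probs.get)   # greedy: take the most likely next token
        tokens.append(best)
        if best == END:
            break
    return tokens

# Hypothetical stand-in for a trained model: next-token probabilities by last token.
TABLE = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.7, "dog": 0.3},
    "cat": {"sat": 0.8, END: 0.2},
    "sat": {END: 0.9, "down": 0.1},
}

def toy_model(tokens: List[str]) -> Dict[str, float]:
    return TABLE.get(tokens[-1], {END: 1.0})

print(greedy_decode(toy_model, ["<start>"]))
# prints ['<start>', 'the', 'cat', 'sat', '<end>']
```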

Overall, the technical details of transformers are complex, but they are what make these models powerful tools for natural language processing and other AI applications. By understanding these details, researchers and engineers can keep improving transformers, making them more efficient and more effective on complex datasets.

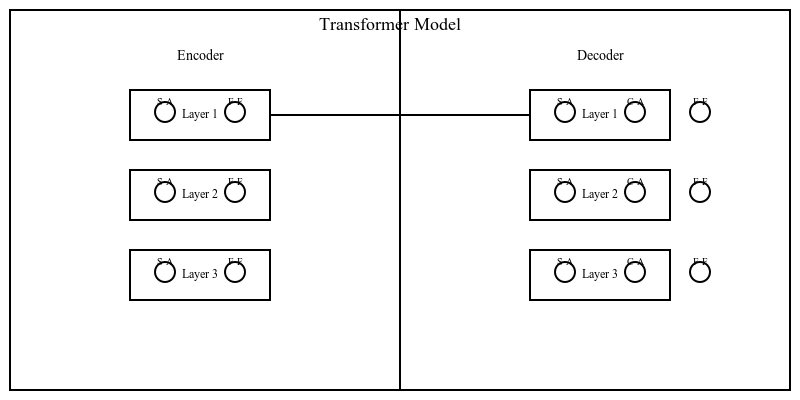

How a transformer works.

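Transformers are built from a stack of layers, and data flows through them in turn: the output of one layer becomes the input of the next. In the original design, each encoder layer contains a self-attention sublayer followed by a position-wise feed-forward sublayer, and each sublayer is wrapped in a residual connection and layer normalization.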
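
A rough sketch of such a layer stack, assuming the post-norm arrangement of the original transformer encoder (each sublayer's output is added to its input and then normalized). Everything here is deliberately simplified and hypothetical: attention uses the input vectors directly instead of learned projections and has a single head, the feed-forward step is a bare ReLU instead of a learned two-layer network, and layer normalization has no learned gain or bias.

```python
import math
from typing import Callable, List

Vector = List[float]
Sequence = List[Vector]

def layer_norm(v: Vector, eps: float = 1e-5) -> Vector:
    """Rescale a vector to zero mean and unit variance (no learned gain/bias here)."""
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [(x - mean) / math.sqrt(var + eps) for x in v]

def self_attention(seq: Sequence) -> Sequence:
    """Toy single-head self-attention: queries, keys and values are the vectors themselves."""
    d = len(seq[0])
    out = []
    for q in seq:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in seq]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        out.append([sum(w * v[i] for w, v in zip(weights, seq)) for i in range(d)])
    return out

def feed_forward(seq: Sequence) -> Sequence:
    """Toy position-wise step: ReLU applied to each vector independently."""
    return [[max(0.0, x) for x in v] for v in seq]

def residual_block(seq: Sequence, sublayer: Callable[[Sequence], Sequence]) -> Sequence:
    """Add the sublayer's output to its input, then normalize each position (post-norm)."""
    return [layer_norm([a + b for a, b in zip(x, y)]) for x, y in zip(seq, sublayer(seq))]

def encoder(seq: Sequence, num_layers: int = 2) -> Sequence:
    """Pass the sequence through a stack of identical layers."""
    for _ in range(num_layers):
        seq = residual_block(seq, self_attention)
        seq = residual_block(seq, feed_forward)
    return seq

tokens = [[1.0, 0.0, -1.0], [0.5, 2.0, 0.0], [-1.0, 1.0, 1.0]]
encoded = encoder(tokens)
```

Each layer keeps the sequence length and vector size unchanged, which is what allows identical layers to be stacked. Many newer models move the normalization before each sublayer (pre-norm) instead.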